Musical ML at NIME2019

06 Sep '19

I was really excited to have several submissions accepted at NIME 2019 in Porto Alegre, alongside several more from the RITMO centre of excellence at UiO. It was really amazing to be at the first NIME in South America and to meet many wonderful musicians and researchers from Brazil and the region.

Workshop in Predictive NIMEs

The first event for me was presenting my workshop in Predictive NIMEs on the first day of the conference. This was in tutorial format and broadly followed the presentations and walkthroughs I’ve been developing on creativeprediction.xyz. I ended up a bit nervous about it because it was fully subscribed (we had to find some extra chairs) and the attendees included some of my favourite NIME contributors — this just shows that there is certainly an appetite in music technology to explore new ways to use machine learning and particularly deep neural networks.

The best part of this workshop was working with a group of attendees to get my IMPS system up and running. I know at least one or two are now starting to use this in new projects!

If you’re interested in following the workshop materials, they’re online here.

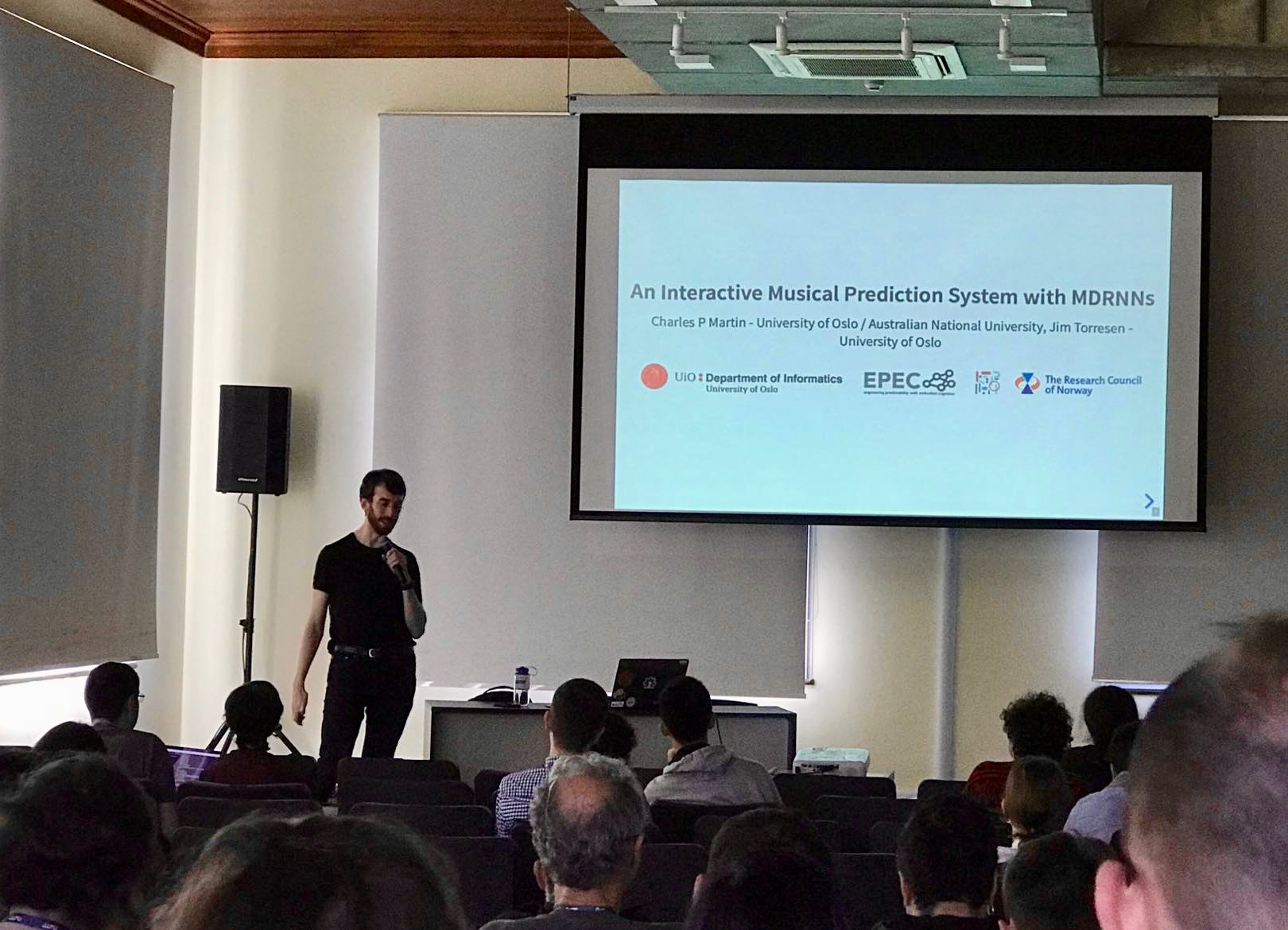

Interactive Musical Prediction System

This paper was a bit of a reveal of the tech I’ve been working on throughout my postdoc in the EPEC project at UiO.

IMPS is a complete system for applying an RNN to predicting musical gesture data in a NIME or other music tech system. The IMPS system consists of an OSC server that receives gestural data and can predict future data using a mixture density recurrent neural network. Rather than using a big dataset, this system is designed to allow musicians and makers to record their own data, train a small MDRNN and start using it as soon as possible. You can use however many dimensions of gestural data you like, and it predicts future events in absolute time (i.e., a number of seconds). It’s easy to connect to environments like Max, Pd, and Processing and even works (kinda) on very small datasets, as shown in the workshop above!
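To make the "mixture density" part a bit more concrete, here is a minimal sketch of how one prediction could be drawn from a mixture of diagonal Gaussians and split into a time delta plus gesture values. This is not the IMPS code: the function names, the layout with the time delta in the first dimension, and the `min_dt` floor are all assumptions made for illustration, and the mixture parameters are hard-coded where a trained MDRNN would normally supply them.

```python
import math
import random


def softmax(logits):
    """Turn unnormalised mixture weights into probabilities."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_mixture(pi_logits, means, stds, rng):
    """Draw one vector from a mixture of diagonal Gaussians.

    pi_logits: one unnormalised weight per mixture component.
    means, stds: one list of per-dimension values per component.
    """
    weights = softmax(pi_logits)
    # Pick a component according to its mixture weight...
    k = rng.choices(range(len(weights)), weights=weights, k=1)[0]
    # ...then sample each dimension independently from that component.
    return [rng.gauss(mu, sd) for mu, sd in zip(means[k], stds[k])]


def next_event(pi_logits, means, stds, rng, min_dt=0.001):
    """Split a sample into (seconds until next event, gesture values).

    Assumed layout (hypothetical): dimension 0 is the time delta in seconds.
    The delta is clamped so predicted events never move backwards in time.
    """
    sample = sample_mixture(pi_logits, means, stds, rng)
    dt = max(sample[0], min_dt)
    return dt, sample[1:]


if __name__ == "__main__":
    rng = random.Random(0)
    # Two made-up components over (dt, x, y); a real network would output these.
    pi_logits = [0.3, -0.2]
    means = [[0.10, 0.2, 0.8], [0.50, 0.7, 0.1]]
    stds = [[0.02, 0.05, 0.05], [0.10, 0.05, 0.05]]
    dt, values = next_event(pi_logits, means, stds, rng)
    print(f"next event in {dt:.3f} s with values {values}")
```

Because the time delta is just another sampled dimension, the same sketch works for any number of gesture dimensions: only the length of each mean and standard deviation list changes.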

The idea of the paper was to introduce IMPS and show that training and prediction are practical for typical NIME tasks. We show that it’s even practical to run IMPS on a Raspberry Pi, and to train the RNN on a typical laptop (no big GPU workstation required).

I had a great response to this work; I think the NIME community really resonates with the idea of reusable tools so hopefully a few of my colleagues try IMPS out in their own projects!

@inproceedings{Martin2019,
  author = {Martin, Charles Patrick and Torresen, Jim},
  title = {An Interactive Musical Prediction System with Mixture Density Recurrent Neural Networks},
  pages = {260--265},
  booktitle = {Proceedings of the International Conference on New Interfaces for Musical Expression},
  editor = {Queiroz, Marcelo and Sedó, Anna Xambó},
  year = {2019},
  month = jun,
  publisher = {UFRGS},
  address = {Porto Alegre, Brazil},
  issn = {2220-4806},
  url = {http://www.nime.org/proceedings/2019/nime2019_paper050.pdf}
}

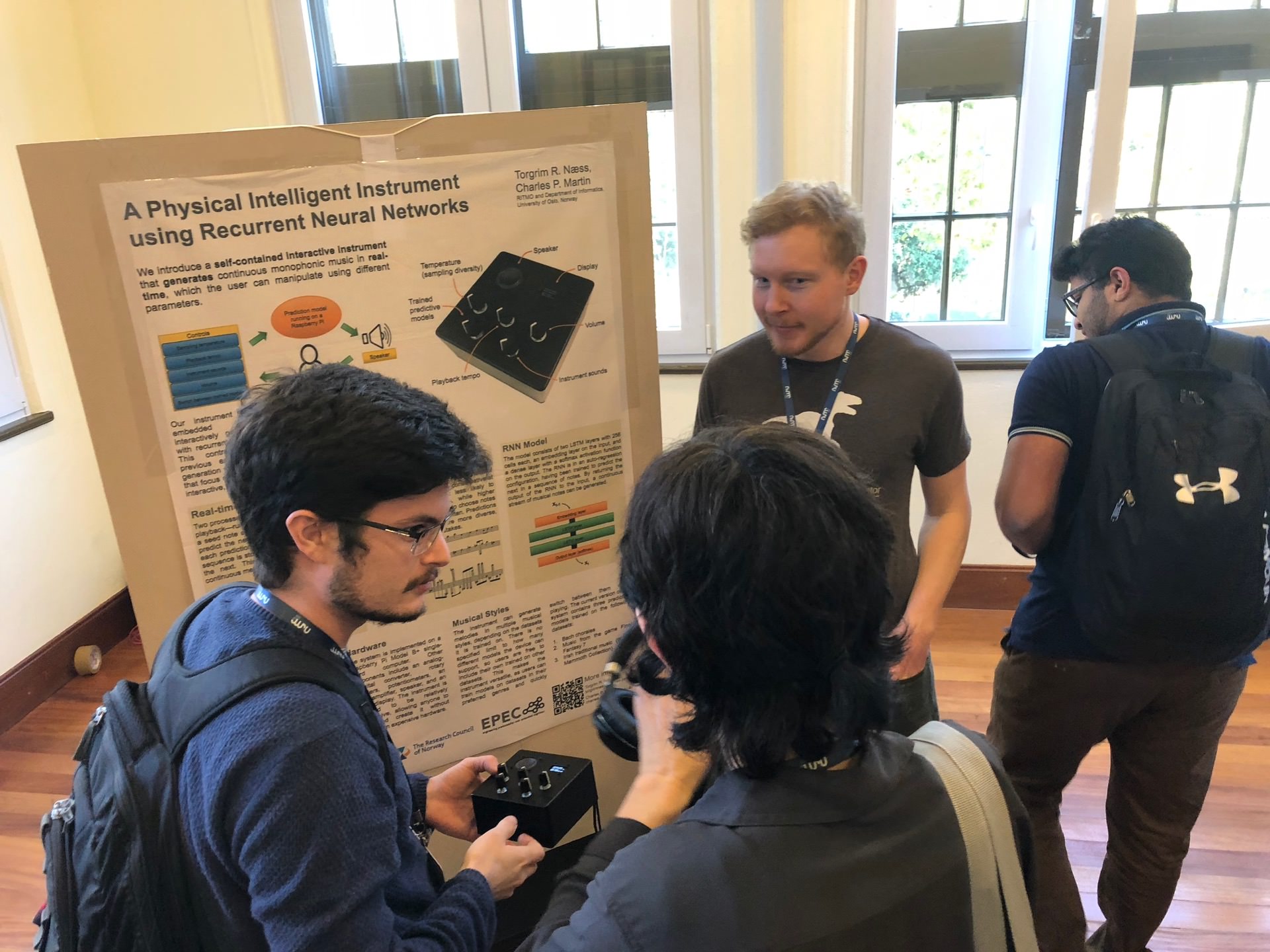

Physical Intelligent Instrument

This short paper came from Torgrim Næss’ master’s project (now completed!), and described his self-contained RNN music maker. This little box contains a Raspberry Pi running a melody-generating deep learning model that plays an endless stream of AI music. There are knobs to control the volume, the tempo, and the software synth instrument used to play the notes, plus two ML-specific knobs: one that controls the “temperature” or sampling diversity, and another to switch between ML models. He included a Bach model, one from a large web-sourced MIDI dataset, and one trained just on Final Fantasy 7 soundtracks.
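For anyone wondering what a "temperature" knob does in practice, here is a minimal sketch of temperature-scaled sampling from a model's note scores. It is a generic illustration rather than Torgrim's implementation: the function names and the three example scores are made up, and a real model would output one score per possible note.

```python
import math
import random


def apply_temperature(logits, temperature):
    """Convert raw scores to probabilities, sharpened or flattened by temperature.

    Low temperature concentrates probability on the highest score (safer,
    more repetitive output); high temperature flattens the distribution
    (more diverse, more surprising output).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_note(logits, temperature, rng):
    """Pick the index of the next note from the temperature-adjusted distribution."""
    probs = apply_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(42)
    scores = [1.0, 2.0, 3.0]  # hypothetical scores for three candidate notes
    for temperature in (0.1, 1.0, 10.0):
        probs = apply_temperature(scores, temperature)
        note = sample_note(scores, temperature, rng)
        print(temperature, [round(p, 3) for p in probs], note)
```

Turning the knob towards zero makes the sampler behave almost like always picking the top-scoring note, while turning it up moves the choice towards a uniform pick over all notes.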

In initial tests, my favourite combination was FF7 and an “orchestra hit” MIDI synth, but it was great to see NIME attendees trying out lots of options and thinking of new ways to use this device in musical scenarios. Maybe a little RNN-box orchestra is in order?

Here’s a demo:

@inproceedings{Næss2019,
  author = {Næss, Torgrim Rudland and Martin, Charles Patrick},
  title = {A Physical Intelligent Instrument using Recurrent Neural Networks},
  pages = {79--82},
  booktitle = {Proceedings of the International Conference on New Interfaces for Musical Expression},
  editor = {Queiroz, Marcelo and Sedó, Anna Xambó},
  year = {2019},
  month = jun,
  publisher = {UFRGS},
  address = {Porto Alegre, Brazil},
  issn = {2220-4806},
  url = {http://www.nime.org/proceedings/2019/nime2019_paper016.pdf}
}

Generating Harmony with LSTM Networks

Finally, one last paper came from a team of students in our IN5490 course at UiO. They did an excellent piece of research on generating harmonised parts with sequence-to-sequence recurrent neural networks, and then developed it into a great paper. This paper had a particularly nice introduction to RNN architectures for a music technology audience.

@inproceedings{Faitas2019,
  author = {Faitas, Andrei and Baumann, Synne Engdahl and Næss, Torgrim Rudland and Torresen, Jim and Martin, Charles Patrick},
  title = {Generating Convincing Harmony Parts with Simple Long Short-Term Memory Networks},
  pages = {325--330},
  booktitle = {Proceedings of the International Conference on New Interfaces for Musical Expression},
  editor = {Queiroz, Marcelo and Sedó, Anna Xambó},
  year = {2019},
  month = jun,
  publisher = {UFRGS},
  address = {Porto Alegre, Brazil},
  issn = {2220-4806},
  url = {http://www.nime.org/proceedings/2019/nime2019_paper062.pdf}
}

Presentations at UFRGS

After NIME, the team from UiO gave a few extra presentations at the Informatics Department at UFRGS. It was fun to hang out with Bruno in his lab and chat with the students! Here’s Synne and Andrei reprising their NIME presentation:

Shoutouts

A couple of shoutouts to my colleagues:

From the RITMO Centre, Cagri Erdem, Alexander Jensenius and Katja Schia had a paper and a performance based on their Vrengt music-dance research. Also from IN5490, Aline Weber, Lucas Alegre, Jim Torresen, and Bruno Castro da Silva had a great paper on parametrising melody generation with Perlin noise.